The greenhouse effect is a wicked combination of spectroscopy, radiation and collision dynamics superimposed on fluid flow. Gravity makes an appearance through the lapse rate, which among other things means that it is colder the higher you go, and there are a few other things thrown in for the joy of it. Explaining it to your mom is not recommended for a family fun night. One of the associated issues is that increasing greenhouse gas concentrations in general, and CO2 in particular, does not increase the greenhouse warming in a simple linear way. Spencer Weart (popular version) and Ray Pierrehumbert (more detail) recently essayed an explanation on Real Climate.

Into the swamp wanders one Lubos Motl, a wannabe climate guru who is running for Bjorn Lomborg of the Czech Republic. Lubos then plays an elegant game of three-card monte with Richard Lindzen as the outside man on the short con. Watch the cards as you listen to the spiel. The trick here is to bury the switch in a morass of polemic and nuttiness. Rabett Labs has filmed the whole thing in slow motion and stripped out the flim to show you the flam. The rubber meets the road about a third of the way down:

"focus on the gases and frequencies where the absorption rate significantly differs from 0% as well as 100% - will effectively eliminate oxygen and ozone. You end up with the standard gases - water, carbon dioxide, methane, and a few others. Moreover, you can use the approximation that the concentration of water in the atmosphere rapidly converges to values dictated by other quantities."

Those other quantities are determined by the extra forcing from increased CO2 concentrations, which raises the vapor pressure of water in the atmosphere. But Lubos goes on:

"The conventional quantity that usually measures the strength of the greenhouse effect is the climate sensitivity defined as the temperature increase from a doubling of CO2 from 0.028% of the volume of the atmosphere in the pre-industrial era to 0.056% of the volume expected before 2100. Currently we stand near 0.038% of the volume and the bare theoretical greenhouse effect, including the quantum-mechanical absorption rates for the relevant frequencies and the known concentration, predicts a 0.6 Celsius degrees increase of temperature between 0.028% and 0.038%,"

This is a low estimate of the increase in global temperature due ONLY to the direct effect of adding CO2 without amplifying feedbacks, direct from Richard Lindzen. Low, but in bounds. And here comes the switch and there goes the Queen into the grifter's pocket...

"roughly in agreement with the net warming in the 20th century."

The measured net warming INCLUDES the feedback from increased water vapor in the atmosphere due to warming of the ocean surface. Lucky Lubos covers it back up again:

"This bare effect can be modified by feedback effects - it can either be amplified or reduced (secondary influence on temperature-driven cloud formation etc.) - but it is still rather legitimate to imagine that the original CO2 greenhouse effect is the driving force behind a more complex process"

The feedbacks AMPLIFY the direct influence of CO2 concentration increases, period. So let's see. The direct effect of doubling CO2 would be an increase in global temperature of 0.6 to 1 K. The total effect, the climate sensitivity, will be 2-5 K, with a best estimate of 3 K (see the AR4 WGI and all over). And now, the fellow behind the cardboard box asks you to pick the shell with the pea under it:

"In terms of numbers, we have already completed 40% of the task to double the CO2 concentration from 0.028% to 0.056% in the atmosphere. However, these 40% of the task have already realized about 2/3 of the warming effect attributable to the CO2 doubling. So regardless of the sign and magnitude of the feedback effects, you can see that physics predicts that the greenhouse warming between 2007 and 2100 is predicted to be one half (1/3 over 2/3) of the warming that we have seen between the beginning of industrialization and this year. For example, if the greenhouse warming has been 0.6 Celsius degrees, we will see 0.3 Celsius degrees of extra warming before the carbon dioxide concentration doubles around 2100."

In the reality based community, we have had about 0.7 K of warming in the last century. That is 0.7/3.0 or about 23% of the warming predicted for a doubling, but because the Earth appears not to have re-established radiative equilibrium it is generally considered that there is an additional 0.5 K of warming built in even if greenhouse gas concentrations cease to grow. 1.2/3.0 = 40%.

As Eli said, watch the switch. The observed warming must be compared to the 3K climate sensitivity which INCLUDES feedbacks. By comparing the observed warming to the much lower 0.6-1K estimate without feedbacks it appears that much more of the warming has already occurred, and that adding more CO2 will have little effect.
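The switch is easier to see with the arithmetic laid out. The following is a minimal sketch that uses only the numbers quoted above (0.7 K observed, 0.5 K still in the pipeline, a 3 K sensitivity with feedbacks, and a 0.6 to 1 K response without them); the variable and function names are illustrative, not anyone's official bookkeeping.

```python
# Numbers taken from the text above; all names here are illustrative.
OBSERVED_WARMING_K = 0.7            # warming over the last century
COMMITTED_WARMING_K = 0.5           # additional warming already built in
SENSITIVITY_WITH_FEEDBACKS_K = 3.0  # best estimate for a CO2 doubling
NO_FEEDBACK_RANGE_K = (0.6, 1.0)    # direct effect of a doubling only


def fraction_realized(warming_k, doubling_response_k):
    """Fraction of the response to a doubling that a given warming represents."""
    return warming_k / doubling_response_k


# Honest comparison: observed (feedbacks included) vs. sensitivity (feedbacks included).
print(f"observed / 3 K:             {fraction_realized(OBSERVED_WARMING_K, SENSITIVITY_WITH_FEEDBACKS_K):.0%}")
print(f"observed + committed / 3 K: {fraction_realized(OBSERVED_WARMING_K + COMMITTED_WARMING_K, SENSITIVITY_WITH_FEEDBACKS_K):.0%}")

# The switch: observed (feedbacks included) vs. the no-feedback response.
for no_feedback_k in NO_FEEDBACK_RANGE_K:
    share = fraction_realized(OBSERVED_WARMING_K, no_feedback_k)
    print(f"observed / {no_feedback_k} K (no feedbacks): {share:.0%}")
```

Dividing the observed warming by the no-feedback number makes it look as if most or all of the warming from a doubling has already happened, which is the whole trick.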

There is, as there always is, much more. Anyhow, Lubos, as part of his pitch, tells us in detail that he is going to explain it all and starts by getting it wrong in strange ways. After a page or two of polemics, Motl starts out on the science ok, sort of, but quickly falls into two of the usual traps:

"The requirement that low-energy transitions must be allowed within the molecule is why the mono-atomic inert gases such as argon or even di-atomic molecules such as nitrogen are not greenhouse gases. Those absorbed infrared rays that are relevant for the greenhouse effect are quickly transformed to kinetic energy of the atmosphere and this energy is either re-emitted in the downward direction or it is not re-emitted at all."

Molecules and Motls basically don't know which end is up, so the energy is re-emitted in all directions, not only downward, and although the thermal energy can be moved considerable distances by convection, it eventually has to be re-emitted to space. How the energy gets there in the end is what the greenhouse effect is all about. Lubos continues by showing a figure:

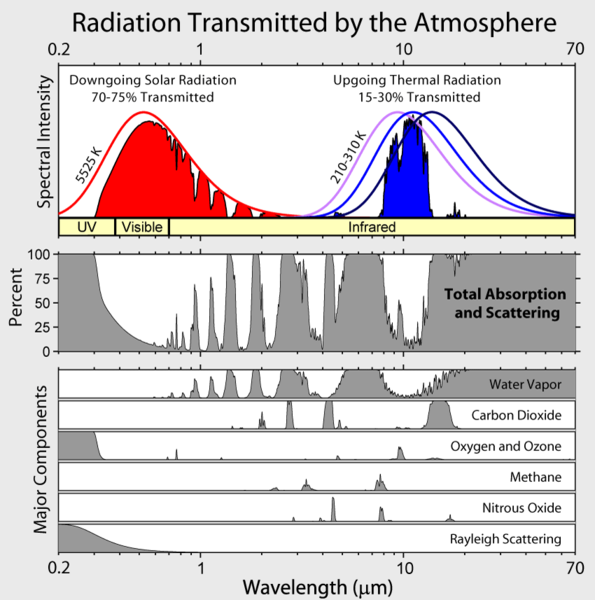

The meaning of the ordinate is not clear, but Lubos does provide a link to David Hodell's version at the University of Florida from a course on Global Change. Hodell, btw, does not agree with Motl's foolishness. We learn that Motl's unattributed graph (Eli thinks he has seen it elsewhere, but can't remember) really shows the percentage of incoming solar radiation at a particular wavelength that is absorbed by a molecule before hitting the surface. (Hodell's abscissa is backwards from Motl's, and Motl's spectra, wherever they came from, are a bit higher resolution.) The Wikipedia article on global warming provides the context that Lubos lacks.

UPDATE: 7/2/2007 Lubos has now added this figure to his post. It was not originally there.

The sun's spectrum is roughly that of a 5800 K black body with a whole bunch of sharp atomic lines in it. Ozone (305-195 nm) and O2 (<0.2>4 um) absorb UV light. The emission from the surface is a smooth black body.

From the Wikifigure you can see that in the range where the earth emits IR, water vapor is the most important absorber, but CO2 plays an important role and tropospheric O3 also has an effect. (Note to the interested: The total emission from the sun is much higher than shown in the figure, but only a small fraction of the sun's light intersects the earth's orb, and the average amount of energy hitting the surface is further decreased because the sun don't shine anywhere half the time on average and because in most places it hits the surface obliquely. This all works out to a factor of 4 less than the solar intensity hitting a disk held at right angles to the sun in orbit. Thus the relative intensities of sunlight falling on the surface and IR emission from the earth are as shown.) Eli could niggle a bit more: both CO2 and H2O have strong absorption spectra below 200 nm which Lubos does not show (they don't play any significant role in absorbing solar radiation), etc., but it is more fun to look at the next lubosaloser:
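The factor of 4 in the note above is plain geometry: a sphere intercepts sunlight like a disk of area pi r^2 but has to spread it over a surface of area 4 pi r^2. A minimal sketch, assuming a solar constant of about 1361 W/m^2 (a value not given in the post) and ignoring reflection and absorption on the way down:

```python
import math

# Assumed value, not from the post: approximate solar constant at Earth's orbit.
SOLAR_CONSTANT_W_M2 = 1361.0


def mean_insolation(solar_constant_w_m2, radius_m=1.0):
    """Average sunlight per unit area of a sphere, day and night, all latitudes."""
    intercepted_w = solar_constant_w_m2 * math.pi * radius_m ** 2  # disk facing the sun
    sphere_area_m2 = 4.0 * math.pi * radius_m ** 2                 # whole surface
    return intercepted_w / sphere_area_m2


average = mean_insolation(SOLAR_CONSTANT_W_M2)
print(f"disk at right angles to the sun: {SOLAR_CONSTANT_W_M2:.0f} W/m^2")
print(f"averaged over the sphere:        {average:.2f} W/m^2")
print(f"ratio:                           {SOLAR_CONSTANT_W_M2 / average:.1f}")
```

The radius cancels, so the ratio is 4 for any sphere; night and oblique incidence are both already folded into the surface area.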

"You can see that water is by far the most important greenhouse gas. We will discuss carbon dioxide later but you may also see that we have included oxygen and ozone, for pedagogical reasons. They don't have too many spectral lines but there is a lot of oxygen in the air, a thousand times the concentration of carbon dioxide! So you might think that the precise concentration of oxygen or ozone will be very important for the magnitude of the greenhouse effect, possibly more important than the concentration of carbon dioxide."

Lubos has not quite figured out the difference between a vibrational band and a spectral line. Bands consist of lots of lines. The resolution of these figures is too poor to resolve the lines. Ozone does contribute to the greenhouse effect, at least the ozone that we see in the troposphere, and ozone has lots of lines in its vibrational spectrum. Oxygen (O2) has a lot of spectral lines; it's just that they are mostly below 300 nm (there are some very weak ones in the near IR and red, from forbidden triplet-singlet transitions which, as Lubos says, you can see because there is a lot of O2). Motl continues down the bunny trail:

"The reason why it's not true is that there is actually so much oxygen in the air that the radiation at the right frequencies is completely absorbed - 100% - while the radiation at wrong frequencies is of course not absorbed at all - 0%. At least ideally - when you neglect the collisional broadening and the Doppler width of the lines and other effects - it should be so. That's why the greenhouse effect of the oxygen doesn't depend on the concentration of oxygen in any significant way."

Codswallop. The reason why O2 does not contribute to the greenhouse effect is that, just like N2, it is a homonuclear diatomic. While the oxygen and nitrogen molecules can vibrate, because of their symmetry these vibrations do not create any transient charge separation. Without such a transient dipole moment, they can neither absorb nor emit infrared radiation.

***************

On top of this there are language problems which are not Lubos' alone. I/Io is the ratio of the intensity of light after passing through some distance L of anything, I, to the incident intensity, Io. I and Io are functions of wavelength. I/Io x 100 is the percent transmitted and (Io-I)/Io x 100 is the percent absorbed. Transmission is almost always given as a percentage and must be between 100% and 0%. Absorption can be found from the Beer-Lambert law

I/Io = exp(-SNL)

where S is the molecular absorption cross section, N the concentration and L the path length. S can in principle take any value (negative values of S would correspond to situations in which there were emitters in the path, not absorbers, in which case the transmission would be > 100%). SNL is called the absorbance and is unitless.
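To get a feel for the numbers, here is a minimal sketch of the Beer-Lambert law as written above. It treats S as a cross section per molecule and N as a number density (an assumption about units), and the values of SNL are made up for illustration rather than taken from any real spectrum.

```python
import math


def transmission(cross_section, number_density, path_length):
    """I/Io from the Beer-Lambert law, exp(-S*N*L)."""
    return math.exp(-cross_section * number_density * path_length)


def percent_transmitted(cross_section, number_density, path_length):
    """100 * I/Io."""
    return 100.0 * transmission(cross_section, number_density, path_length)


def percent_absorbed(cross_section, number_density, path_length):
    """100 * (Io - I)/Io."""
    return 100.0 - percent_transmitted(cross_section, number_density, path_length)


# Hypothetical values of S*N*L, chosen only to span weak to strong absorption.
for snl in (0.1, 1.0, 5.0, 10.0):
    t = percent_transmitted(snl, 1.0, 1.0)
    a = percent_absorbed(snl, 1.0, 1.0)
    print(f"SNL = {snl:4.1f}: {t:7.3f}% transmitted, {a:7.3f}% absorbed")
```

At small SNL, doubling the concentration roughly doubles the percent absorbed; at large SNL the transmission is already close to zero at that wavelength, so the same doubling changes very little there.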

People are throwing around the word saturation. Saturation has a specific meaning for optical transitions. For a simple two level system, when the populations of the upper and lower states are equal, the probability of absorbing a photon which matches the energy difference between the states is the same as the probability of that photon stimulating the emission of a second photon (there are caveats) and the transition is said to be saturated.
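This definition can be illustrated with the simplest possible rate-equation model. The sketch below is a toy under stated assumptions, not a description of the atmosphere: two levels of equal degeneracy, a pumping rate W that drives absorption and stimulated emission equally, and a single lumped relaxation rate A for everything that empties the upper level. Setting dN2/dt = W(N1 - N2) - A N2 to zero with N1 + N2 = 1 gives N2 = W / (2W + A).

```python
def upper_state_fraction(pump_rate, relaxation_rate):
    """Steady-state fraction of molecules in the upper level: W / (2W + A)."""
    return pump_rate / (2.0 * pump_rate + relaxation_rate)


def population_difference(pump_rate, relaxation_rate):
    """N1 - N2, which sets the net absorption; it goes to zero at saturation."""
    return 1.0 - 2.0 * upper_state_fraction(pump_rate, relaxation_rate)


# Hypothetical pump rates, in units of the relaxation rate A = 1.
for pump_rate in (0.01, 1.0, 100.0, 1.0e4):
    n2 = upper_state_fraction(pump_rate, 1.0)
    diff = population_difference(pump_rate, 1.0)
    print(f"W/A = {pump_rate:8.2f}: upper fraction = {n2:.4f}, N1 - N2 = {diff:.4f}")
```

The upper-state fraction approaches one half and never exceeds it, so in this sense saturation is about the light being intense enough to equalize the populations, which is a different thing from the path being optically thick.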