Sometimes a bunny just has to answer a tweet nothing

.@MarkSteynOnline @MichaelEMann @gregladen @curryja @NobelPrize Ah, ACLU back to mission of defending Nazi scum? http://t.co/bplzT1MAi3

— eli rabett (@EthonRaptor) October 1, 2014

Eli has been writing about the problems that sea level rise poses for the east coast of the US from Cape Hatteras north. North Carolina has chosen the ostrich strategy, driven by a blog scientist called John Droz. As Eli wrote, about the only thing that might save the situation is the fleeing insurers.

They would search for a home in Norfolk to be near Josh's office. But it could not be in a flood plain, susceptible to rising seas, storm surge and escalating flood insurance prices.

Their decision is one glimpse into the changing dynamics of coastal real estate. A growing awareness of sea level rise and flooding, coupled with rising flood insurance premiums as the federal government phases out subsidies, has the potential to reshape segments of the Hampton Roads market.

Sellers are having to alter houses to deal with the risk of flooding, such as moving basements to attics (or at least the heating and cooling plants).

Real estate agent Kathy Heaton found herself on the flip side of the growing concern, and perhaps at the forefront of a new trend.

King Canute had it easy.

For months, the Nancy Chandler and Associates agent had been trying to sell a home in the desirable Norfolk neighborhood of Larchmont. The problem: Like many homes in that area, it's in a high-risk flood plain. Flood insurance could run up to $3,500 based on estimates she's seen.

That would add almost $300 to a monthly mortgage, an amount many buyers Heaton has encountered would rather put into the cost of a home.

Regular homeowners insurance does not cover flooding. Homes in the flood plains with mortgages are required by lenders to have insurance from the subsidized National Flood Insurance Program. It is struggling financially, and reforms are steadily increasing rates - about 18 percent a year - until they represent coverage of the true cost of the risk.
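The premium arithmetic in the article can be checked in a few lines. This is a minimal sketch that assumes the 18 percent annual increase compounds at a steady rate; the article does not say the increases are exactly uniform, and the $3,500 figure is Heaton's estimate, not a quote for any particular policy.

```python
import math

def monthly_cost(annual_premium):
    """Spread an annual premium evenly over 12 mortgage payments."""
    return annual_premium / 12

def doubling_time(annual_increase):
    """Years for a premium to double if it grows by a fixed fraction every year."""
    return math.log(2) / math.log(1 + annual_increase)

def premium_after(premium, annual_increase, years):
    """Premium after compounding a fixed fractional increase for `years` years."""
    return premium * (1 + annual_increase) ** years

print(f"monthly share of $3,500/yr: ${monthly_cost(3500):.2f}")
print(f"doubling time at 18%/yr: {doubling_time(0.18):.1f} years")
```

Under these assumptions $3,500 a year is about $292 a month, which matches the "almost $300" in the article, and a premium rising 18 percent a year roughly doubles every 4.2 years.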

The specter of flood insurance is making the Larchmont home, assessed at around $270,000, nearly impossible to sell.

"We've probably had 35 showings, and everybody has walked out because of the flood insurance," Heaton said.

The home is not unique in a city penetrated by tidal creeks with some of the highest rates of sea level rise in the country, a combination of sinking land and rising water.

Nick Stokes has had his teeth in the hockey stick for a while. Back in 2011 he explored Deep Climate's exposure of the Wegman hanky panky. Nick found that if you didn't do the cherry pick, the results for decentered PCs were much less hockey-stick-like.

Brandon Shollenberger responded by trying to move the goal posts. The selection by HS index used by Wegman had the incidental effect of orienting the profiles. That's how DC noticed it; the profiles, even if Mann's algorithm did what Wegman claimed, should have given both up and down shapes. Brandon demanded that I should, having removed the artificial selection, somehow tamper with the results to regenerate the uniformity of sign, even though many had no HS shape to base such a reorientation on.

And so we see a pea-moving; it's now supposed to be all about how Wegman shifted the signs. It isn't; it's all about how HS's were artificially selected. More recent stuff here. So now Steve McIntyre at CA is taking the same line. Bloggers are complaining about sign selection: "While I’ve started with O’Neill’s allegation of deception and “real fraud” related to sign selection,...". No, sign selection is the telltale giveaway. The issue is hockey-stick selection: 100 out of 10000, by HS index.

Nick earlier had provided a simple explanation of why only looking at PC1, as McIntyre does, leaves a distorted picture (Eli has made this point in the past), and today, well, today there is more on flipped curves from both McIntyre and Moyhu.

. . . "as the difference between the 1902-1980 mean (the “short centering” period of Mannian principal components) and the overall mean (1400-1980), divided by the standard deviation – a measure that we termed its “Hockey Stick Index (HSI)”."

If they had defined it as the absolute value of the difference, BS and SM (well, Eli notices these things) might have a leg to stand on, but by sorting on the HSI as defined, SM eliminated all negative-going curves from their collection of 100. Accident or incompetence? Once again Eli notes that Steve McIntyre insists that he is incredibly precise. Eli reports, you decide.
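The effect of sorting on the signed HSI, rather than its absolute value, can be illustrated with a small simulation. This is a hypothetical sketch, not Wegman's or McIntyre's code: it uses AR(1) red noise with an arbitrary persistence of 0.9 (their noise model was different), 1000 series instead of 10000, and the population standard deviation. It ranks the series once by HSI as defined and once by |HSI|, then looks at the orientation of the top 100.

```python
import random
import statistics

# Annual values for 1400-1980 inclusive, as in the quoted definition
YEARS = list(range(1400, 1981))

def hockey_stick_index(series, years=YEARS, short_start=1902):
    """HSI = (mean over the short-centering period - overall mean) / standard deviation."""
    short = [x for x, y in zip(series, years) if y >= short_start]
    return (statistics.fmean(short) - statistics.fmean(series)) / statistics.pstdev(series)

def red_noise(n, rng, phi=0.9):
    """Hypothetical AR(1) red-noise series; phi=0.9 is an illustrative choice."""
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, 1.0)
        out.append(x)
    return out

def top_k(values, k, key):
    """Indices of the k largest values after applying `key`."""
    return sorted(range(len(values)), key=lambda i: key(values[i]), reverse=True)[:k]

rng = random.Random(2014)
hsi = [hockey_stick_index(red_noise(len(YEARS), rng)) for _ in range(1000)]

by_signed = top_k(hsi, 100, key=lambda h: h)   # sort on HSI as defined
by_abs = top_k(hsi, 100, key=abs)              # sort on |HSI|

print("negative-going curves, signed sort:", sum(hsi[i] < 0 for i in by_signed))
print("negative-going curves, |HSI| sort:", sum(hsi[i] < 0 for i in by_abs))
```

Because the noise is symmetric, about half of the series have a negative HSI. Ranking on the signed index keeps only upward-pointing curves, while ranking on the absolute value keeps both orientations.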

In January, when Steven E. Koonin welcomed participants to the Climate Change Statement Review Workshop that he was chairing for the American Physical Society, he made a point of acknowledging “experts who credibly take significant issue with several aspects of the consensus picture.” Participating, and fitting that description, were climate scientists Judith Curry, Richard Lindzen, and John Christy. Now Koonin has published a high-visibility commentary in the Wall Street Journal under the headline “Climate science is not settled.” In the paper version, the editors italicized the word not.

Ben Santer, Isaac Held and William Collins have now been officially declared chopped liver.

It can be added that Koonin has a long past in investigating and pronouncing on physics questions of special public importance. A quarter century ago, the article “Physicists debunk claim of a new kind of fusion” included this: “Dr. Steven E. Koonin of Caltech called the Utah report a result of ‘the incompetence and delusion of Pons and Fleischmann.’ The audience of scientists sat in stunned silence for a moment before bursting into applause.” . . .

Now Eli wonders who wrote this press release. Not really, but implausible deniability is always useful. Suspicions are that something wonderful will come from NYU.

Koonin’s 2000-word WSJ commentary dominates the front page of the Saturday Review section, with a jump to an interior page. The editors signposted it in several ways. The subhead says, “Climate change is real and affected by human activity, writes a former top science official of the Obama administration. But we are very far from having the knowledge needed to make good policy.” A call-out line in boldface on the front page says, “Our best climate models still fail to explain the actual climate data.” Another, after the jump to p. C2, says, “The discussion should not be about ‘denying’ or ‘believing’ the science.” A photo caption on the jump page says, in part, “Today’s best estimate of climate sensitivity is no more certain than it was 30 years ago.” A caption on the front page says, “While Arctic ice has been shrinking, Antarctic sea ice is at a record high.” (Although that photo plainly shows only the extraction of an ice-core sample, the caption adds, “Above, scientists measure the sea level in Antarctica.”)

I'd like to note that the author of the piece, Steven T. Corneliussen, also authored this nice write-up of the National Review attacking Neil deGrasse Tyson. It includes some nice notes about the Discovery Institute and Ann Coulter and reports these opinions as if they were just news. It's not clear why this was deemed newsworthy to the physics community. See how oddly it reads.

Denunciations from Roger Jr. and Andy of such obvious issue advocacy are a bit late today.

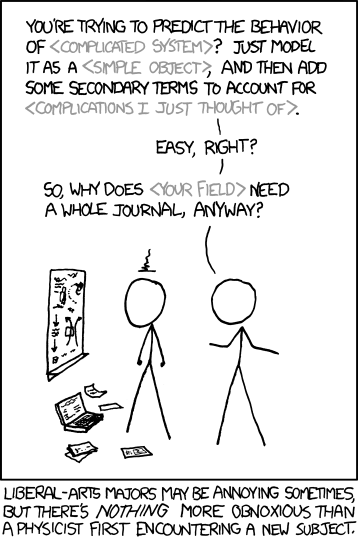

There is considerable unhoppiness about the APS. The arrogance of physicists was the obvious driver in setting the stage for the now appearing Steve Koonin cluster fuck. As Eli pointed out, the members of the Panel on Public Affairs (POPA) drafting sub-committee were several courses short of a clue on climate science, even the physics parts of it. Ankh commented on how life imitates xkcd.

Another source of real frustration is that Dr. Koonin had a real opportunity to listen. To consult experts in many different aspects of climate science. To do a deep dive into the science. To seek understanding of complex scientific issues. He did not make use of this opportunity. His op-ed is not a deep dive - it is a superficial toe-dip into a shallow puddle, rehashing the same tired memes (the "warming hiatus" points toward fundamental model errors, climate scientists suppress uncertainties, there's a lack of transparency in the IPCC process, climate always varies naturally, etc.)

Even though the human influence on climate was much smaller in the past, the models do not account for the fact that the rate of global sea-level rise 70 years ago was as large as what we observe today—about one foot per century.

What are the facts?

In the period 1870-1924, the rate of sea-level rise was 0.8 mm/yr.

In 1925-1992, the rate was 1.9 mm/yr, and in 1993-2014 the rate was 3.2 mm/yr. So the rate has quadrupled in the last century, from 0.8 mm/yr to 3.2 mm/yr.

The rate data can be found here, from Sato and Hansen. (Data were last updated in May 2014).
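Koonin's "one foot per century" and the Sato and Hansen rates are in different units. The conversion below is a minimal sketch using the period-average rates quoted above and the exact definition 1 ft = 304.8 mm.

```python
MM_PER_FOOT = 304.8  # exact by definition: 1 ft = 0.3048 m

def mm_per_yr_to_ft_per_century(rate_mm_per_yr):
    """Convert a sea-level rise rate from mm/yr to feet per century."""
    return rate_mm_per_yr * 100 / MM_PER_FOOT

# Period-average rates quoted above (Sato and Hansen)
rates_mm_per_yr = {"1870-1924": 0.8, "1925-1992": 1.9, "1993-2014": 3.2}

for period, rate in rates_mm_per_yr.items():
    print(f"{period}: {rate} mm/yr = {mm_per_yr_to_ft_per_century(rate):.2f} ft/century")

print(f"one foot per century = {MM_PER_FOOT / 100:.2f} mm/yr")
print("latest / earliest rate:", rates_mm_per_yr["1993-2014"] / rates_mm_per_yr["1870-1924"])
```

One foot per century is about 3.05 mm/yr. The 1925-1992 average of 1.9 mm/yr works out to about 0.62 ft/century, and only the 1993-2014 rate (about 1.05 ft/century) reaches a foot per century. These are period averages, so they do not pin down the rate in any single year.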

Basic climate really is settled. While we don't know everything, we know enough. The rise of atmospheric carbon dioxide is causing problems now, and will cause bigger problems in the future.

Koonin doesn't think we know enough to set good climate policy.

He's wrong: on the supply side, policy should phase out fossil fuels (petroleum, coal, natural gas) and substitute solar, wind, hydro and nuclear instead.

On the demand side, greater energy efficiency.

We don't know everything, but we know enough.

Sea-level rise was important in the $65B in damages inflicted by Hurricane Sandy on the tri-state NY metro area (NY, NJ, CT) in 2012. Koonin is now at NYU, whose Langone Medical Center sustained $1.13B in damages, and its patients had to be evacuated.

Before NYU, Koonin was chief scientist at the oil company BP.

and as we all know, no hurricane has hit the US in like thousands of days

Climate action is tremendously important to me. As OMB Director, due to the wide-ranging effects that climate change is having – and will continue to have – it’s critical to our ability to operate and fund the government in a responsible manner.

From where I sit, climate action is a must do; climate inaction is a can’t do; and climate denial scores – and I don’t mean scoring points on the board. I mean that it scores in the budget – climate denial will cost us billions of dollars. The failure to invest in climate solutions and climate preparedness doesn’t get you membership in a Fiscal Conservatives’ Caucus – it makes you a member of the Flat Earth Society. Climate denial doesn’t just fly in the face of the overwhelming judgment of science – it is fiscally foolish. And while we cannot say with certainty that any individual event is caused by climate change, it is clearly increasing the frequency and intensity of several kinds of extreme weather events. The costs of climate change add up and ignoring the problem only makes it worse.

Today-2014 Atlantic hurricane is half over

Streak without intense hurricane landfall now 3244 days

Long-term trends: http://t.co/6TmPhcQuvT

— Roger Pielke Jr. (@RogerPielkeJr) September 10, 2014

But it’s also personal – as a native New Yorker, Superstorm Sandy brought home the impact of extreme weather. New York is where I grew up. It’s where I got married. And it’s where my children were born and raised for most of their lives.

and Aunt Judy tells us that the pause is in the stadium wave pudding

But after Superstorm Sandy, it was where hundreds of homes were turned into piles of debris; mom and pop businesses were left submerged in water; and roads – including the one I had taken my driving test on – were wiped out. One hundred sixty people lost their lives. One of them was a daughter of a family friend. She was just 24 years old.

As we all know, the storm also carried a hefty price tag: it caused $65 billion in damages and economic losses. Nine million homes and businesses lost power. Over 650,000 homes were damaged or completely destroyed. To help recover and rebuild, the Federal Government provided over $60 billion in much needed relief funding to affected areas.

#stadiumwave? MT @curryja: New post @ Climate Etc. - Trust, and don't bother to verify http://t.co/YlzAGXa5uW

— O. Bothe (@geschichtenpost) October 18, 2013

But, let’s get some facts straight about the continued costs if we don’t act.

Still, the future is bright

Thirteen of the 14 warmest years since good records became available in the late 19th century have occurred since 2000.

Watts Up With That? http://t.co/EgkDjPYk9B

Excerpt: So what harm does it do if Tyson makes up stories to fit h... http://t.co/dJmu0aGH7u

— Weather Goddess 7 (@Channel7Weather) September 19, 2014

Looking ahead, leading estimates suggest that if we see warming of 3° Celsius above preindustrial levels, instead of 2°, we could see additional economic damages of approximately 0.9 percent of global output per year. Our Council of Economic Advisers puts this figure into perspective – 0.9 percent of estimated 2014 U.S. GDP is approximately $150 billion.

Well, Shaun, you could cut the pipeline off at the knees.

Within the next 15 years, higher sea levels combined with storm surge and potential changes in hurricane activity are projected to increase the average annual cost of coastal storms along the Eastern Seaboard and the Gulf of Mexico by $7 billion, bringing the total price tag for coastal storms to $35 billion each year.
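The dollar figures in the speech can be cross-checked by backing out what they imply. This sketch uses only numbers quoted above; the implied GDP is simply $150 billion divided by 0.9 percent, and since both inputs are rounded it only approximates the actual 2014 figure.

```python
def implied_base(amount, share):
    """If `amount` is `share` of some total, return that total."""
    return amount / share

# Figures quoted in the speech above
damage_share = 0.009        # 0.9 percent of output
damage_2014_usd = 150e9     # "approximately $150 billion"
coastal_increase = 7e9      # projected rise in average annual coastal storm cost
coastal_total = 35e9        # projected total after that rise

gdp_implied = implied_base(damage_2014_usd, damage_share)
coastal_now = coastal_total - coastal_increase

print(f"implied 2014 U.S. GDP: ${gdp_implied / 1e12:.1f} trillion")
print(f"implied current coastal storm cost: ${coastal_now / 1e9:.0f} billion a year")
print(f"projected increase: {coastal_increase / coastal_now:.0%}")
```

The $150 billion figure implies a 2014 GDP of roughly $16.7 trillion, and a $7 billion increase to a $35 billion total implies current coastal storm costs of about $28 billion a year, a 25 percent rise.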

According to the National Climate Assessment, climate change has made the fire season in the United States longer, and on average, more intense. Funding needed for federal wildland fire management has tripled since 1999—averaging over $3 billion annually. In a world of finite budgets, greater fire suppression costs have left less money available for forest management and fire preparedness. So we spend what we have to in order to put out the fires, and underinvest in the tools that can help mitigate them – only leading to higher costs in the future.

And then there’s the devastating impact of drought in recent years. In 2012, we experienced what NOAA has called the country’s most extensive drought in more than 50 years, racking up $30 billion in damages. This year, California has been facing its third worst drought in recorded history, with a projected cost to the State’s economy of $2.2 billion and more than 17,000 jobs.

The bill to all of us, as taxpayers, is going up too: For example, in January, when the 2014 Farm Bill passed, CBO estimated that agricultural disaster assistance payments would total just under $900 million this year. But due to the severity of the drought, USDA has already spent $2.6 billion this year—three times what the CBO estimated would occur in a more typical year. And crop insurance payouts in the aftermath of the 2012 drought totaled more than $17 billion.

Now, when you consider the impact of climate change on the Federal Budget, it’s bad news for everyone. Even a small reduction in real GDP growth can dramatically reduce Federal revenue, drive up our deficits, and impact the government’s ability to serve the public.

Now, I’ve painted a pretty grim picture. But we are not powerless.

Here’s maybe the crucial point: if you think what the other person says is absurd, just stop. There’s no way you can have a good faith discussion with someone who you think is talking nonsense.

Electric vehicles, if they are charged by green electricity, can reduce carbon emissions. Battery technology is a key factor holding back electric cars. Physics Nobel laureate Burton Richter in his admirable 2010 book, Beyond Smoke and Mirrors: Climate change and Energy in the 21st Century, recommends more research into battery technology.

Accordingly, there were national as well as local issues at stake this week, when the Nevada Legislature met in special session. They voted unanimously to give the Tesla company $1.3 B in tax breaks as incentive to build a $5B Gigafactory (battery factory) near Reno. Tesla claims the factory will create 6,500 new jobs, which works out to $200,000 per job.

I hope they got some of that in writing, because verbal promises are worthless. If Tesla ends up creating only half the number of jobs that it touted, are the tax breaks cut in half also?

Nevada's governor, Brian Sandoval, kept the legislature in the dark until the special session met, and presented it to the legislature as a take-it-or-leave-it deal.

Tesla was negotiating with other states besides Nevada, and was in a position to drive a hard bargain. It took a stupendous amount of bribery ($1.3 B works out to $471 per Nevada resident) to get the factory in Nevada.
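The per-job and per-resident figures follow from simple division. This sketch uses only the numbers in the post; the implied population is just $1.3 B divided by $471, not a census figure, and the half-the-jobs case is the hypothetical raised above.

```python
tax_breaks = 1.3e9      # Nevada tax breaks, dollars
promised_jobs = 6500    # jobs Tesla says the Gigafactory will create
per_resident = 471      # dollars per Nevada resident, as quoted above

per_job = tax_breaks / promised_jobs
implied_population = tax_breaks / per_resident
per_job_if_half = tax_breaks / (promised_jobs / 2)   # hypothetical: only half the jobs appear

print(f"per promised job: ${per_job:,.0f}")
print(f"implied Nevada population: {implied_population / 1e6:.2f} million")
print(f"per job if only half appear: ${per_job_if_half:,.0f}")
print(f"five jobs at that rate: ${5 * per_job:,.0f}")
```

$1.3 B over 6,500 jobs is exactly $200,000 per job, the $471 per resident figure implies about 2.76 million Nevadans, and if only half the jobs materialize while the tax breaks stay the same, the cost doubles to $400,000 per job. At $200,000 a job, five jobs come to a million dollars.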

Skeptics think that Tesla stock is the latest bubble stock. Insiders at Panasonic, VW, and Daimler have expressed skepticism. Analysts say that the factory will only be profitable if it can reduce battery manufacturing costs by 30% from present levels, and must sell 500,000 cars per year. Last year, Tesla sold under 25,000 cars. The company is not currently profitable.

Critics of the deal came from the right and left. The right was represented by the NPRI, the Nevada Policy Research Institute, which claims it supports government "transparency". However, NPRI refuses to disclose its funders, so its funding sources remain officially secret (it is widely viewed as a front group for at least one big casino). NPRI thought the governor's calculations of the benefits of the factory were too optimistic. The left was represented by the NPLA, the Nevada Progressive Leadership Alliance, which was concerned with funding vital government services. In the short run at least, the result will be a demand for government services for schools, police, fire, roads, etc., but without any additional tax revenues.

Governor Sandoval claims that Nevada will benefit by $100 Billion over the next 20 years, even after the tax breaks. Even if it doesn't happen, he'll still be OK. The factory won't be running before 2017 at the earliest, and Sandoval is widely touted as a potential vice-presidential Republican candidate for the 2016 election cycle.

I wrote to my state legislators, pointing out the price tag of $200,000 per job. I asked politely if I form a company and create five jobs, do I get a million dollars in tax breaks? I will let my faithful readers at Rabett Run know when and if I hear anything. Don't hold your breath.

Or does it? Adding to the madness, now there is scientific uncertainty about the actual extent of the ozone problem as it relates to CFCs. More recent science has shown that the sensitivity of the Earth’s ozone layer might very well be 10 times less than was originally believed back in the 1980s when the alarm was first sounded. As reported in the prestigious science journal Nature, Markus Rex, an atmospheric scientist at the Alfred Wegener Institute for Polar and Marine Research in Potsdam, Germany, found that the breakdown rate of a crucial CFC-related molecule, dichlorine peroxide (Cl2O2), is almost an order of magnitude lower than the currently accepted rate:

“This must have far-reaching consequences,” Rex says. “If the measurements are correct we can basically no longer say we understand how ozone holes come into being.” What effect the results have on projections of the speed or extent of ozone depletion remains unclear.

Before, well, before. That link goes back to 2007, almost a pause ago, and is not to a scientific paper but to a news report, one which quotes Rex. What this is all about is a claim by Pope et al. from JPL that the absorption cross section of ClO-OCl (aka the ClO dimer or Cl2O2) was much smaller than had previously been measured. This would mean that the rate at which it broke apart (photolyzed or photodissociated) after absorbing a UV photon was much slower. Thus there would be much less ClO and Cl available to participate in the catalytic destruction of ozone.
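Why a smaller cross section means slower photolysis: the photolysis rate coefficient is the wavelength integral of the absorption cross section σ(λ), the quantum yield φ(λ) and the actinic flux F(λ), J = ∫ σ(λ) φ(λ) F(λ) dλ, and the photolytic lifetime is 1/J. The sketch below discretizes that integral. All numbers are hypothetical, chosen only for illustration, and are not measured Cl2O2 cross sections or stratospheric fluxes; scaling the whole spectrum by ten is also a simplification, since the Papanastasiou abstract below places the disagreement with Pope et al. at 300-420 nm.

```python
def photolysis_rate(sigma, quantum_yield, actinic_flux, dlambda_nm):
    """Discretized J = sum of sigma * phi * F * d(lambda) over wavelength bins (s^-1).

    sigma in cm^2 molecule^-1, actinic_flux in photons cm^-2 s^-1 nm^-1,
    dlambda_nm is the bin width in nm.
    """
    return sum(s * q * f for s, q, f in zip(sigma, quantum_yield, actinic_flux)) * dlambda_nm

# Hypothetical values on five 10 nm bins (NOT measured Cl2O2 data or real fluxes)
sigma_ref = [6e-18, 3e-18, 1e-18, 3e-19, 1e-19]
quantum_yield = [1.0] * 5
flux = [1e12, 5e13, 2e14, 4e14, 5e14]

sigma_small = [s / 10 for s in sigma_ref]   # a cross section ten times smaller

j_ref = photolysis_rate(sigma_ref, quantum_yield, flux, 10.0)
j_small = photolysis_rate(sigma_small, quantum_yield, flux, 10.0)

print(f"J: {j_ref:.2e} vs {j_small:.2e} s^-1")
print(f"photolytic lifetime: {1 / j_ref:.0f} s vs {1 / j_small:.0f} s")
```

Because J is linear in σ, a cross section ten times smaller gives a J ten times smaller and a photolytic lifetime ten times longer, which is why the Pope et al. result would have meant much less free chlorine.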

To understand how this works, start with the photolysis of the dimer

ClOOCl + hv --> Cl + ClOO

after which the ClOO falls apart

ClOO + M --> Cl + O2 + M

and two of the Cl atoms react with two ozone molecules

Cl + O3 --> ClO + O2
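The cycle can be checked by bookkeeping: add up all the reactions and cancel whatever appears on both sides. This sketch includes the dimer formation step ClO + ClO + M --> ClOOCl + M, which the reactions listed above presuppose but do not show; it is the standard first step of the ClO dimer cycle.

```python
from collections import Counter

def side(*species):
    """One side of a reaction as a multiset of species."""
    return Counter(species)

# ClO dimer cycle; the first step is included as the assumed dimer formation
cycle = [
    (side("ClO", "ClO", "M"), side("ClOOCl", "M")),   # ClO + ClO + M --> ClOOCl + M
    (side("ClOOCl", "hv"), side("Cl", "ClOO")),       # ClOOCl + hv --> Cl + ClOO
    (side("ClOO", "M"), side("Cl", "O2", "M")),       # ClOO + M --> Cl + O2 + M
    (side("Cl", "O3"), side("ClO", "O2")),            # first Cl + O3
    (side("Cl", "O3"), side("ClO", "O2")),            # second Cl + O3
]

def net_reaction(steps):
    """Sum all steps, then cancel species that appear on both sides."""
    reactants, products = Counter(), Counter()
    for lhs, rhs in steps:
        reactants.update(lhs)
        products.update(rhs)
    # Counter subtraction keeps only positive counts
    return reactants - products, products - reactants

lhs, rhs = net_reaction(cycle)
print(dict(lhs), "->", dict(rhs))   # {'hv': 1, 'O3': 2} -> {'O2': 3}
```

All chlorine species cancel, so chlorine acts as a catalyst, and the net effect of one photon is to turn two ozone molecules into three oxygen molecules.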

Other groups have yet to confirm the new photolysis rate, but the conundrum is already causing much debate and uncertainty in the ozone research community. “Our understanding of chloride chemistry has really been blown apart,” says John Crowley, an ozone researcher at the Max Planck Institute of Chemistry in Mainz, Germany.

And of course, some of the debate and uncertainty led to new experimental measurements, but that takes a while, and a short while longer to get published. In 2009, Eli commented on a paper from the Academia Sinica in Taiwan that conclusively showed Pope et al. to be wrong. Of course there was more, most importantly a paper by Burkholder's group (Papanastasiou et al.) at the NOAA Boulder lab (open version sort of).

The UV photolysis of Cl2O2 (dichlorine peroxide) is a key step in the catalytic destruction of polar stratospheric ozone. In this study, the gas-phase UV absorption spectrum of Cl2O2 was measured using diode array spectroscopy and absolute cross sections, σ, are reported for the wavelength range 200-420 nm. Pulsed laser photolysis of Cl2O at 248 nm or Cl2/Cl2O mixtures at 351 nm at low temperature (200-228 K) and high pressure (∼700 Torr, He) was used to produce ClO radicals and subsequently Cl2O2 via the termolecular ClO self-reaction. The Cl2O2 spectrum was obtained from spectra recorded following the completion of the gas phase ClO radical chemistry. The spectral analysis used observed isosbestic points at 271, 312.9, and 408.5 nm combined with reaction stoichiometry and chlorine mass balance to determine the Cl2O2 spectrum. The where the quoted error limits are 2σ and include estimated systematic errors. The Cl2O2 absorption cross sections obtained for wavelengths in the range 300-420 nm are in good agreement with the Cl2O2 spectrum reported previously by Burkholder et al. (J. Phys. Chem. A 1990, 94, 687) and significantly higher than the values reported by Pope et al. (J. Phys. Chem. A 2007, 111, 4322). A possible explanation for the discrepancy in the Cl2O2 cross section values with the Pope et al. study is discussed. Representative atmospheric photolysis rate coefficients are calculated and a range of uncertainty estimated based on the determination of σCl2O2(λ) in this work. Although improvements in our fundamental understanding of the photochemistry of Cl2O2 are still desired, this work indicates that major revisions in current atmospheric chemical mechanisms are not required to simulate observed polar ozone depletion.

And what is the possible problem with the Pope study? Well, it turns out that they were measuring the absorption of ClO dimer in the same region where Cl2 absorbs (between 300 and 400 nm, kind of bell shaped).
As the abstract discusses, the method of producing ClO dimer also produces Cl2 as a byproduct, and thus you have to know how much Cl2 there is in the mixture you are measuring. Papanastasiou et al. think that Pope et al. got this slightly wrong (4.5%).
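A toy Beer-Lambert calculation shows why a small error in the Cl2 amount matters when the dimer is measured where Cl2 also absorbs. All numbers below are hypothetical, with Cl2 assumed to contribute most of the absorbance at the chosen wavelength, and reading the 4.5% as an overestimate of the Cl2 is an assumption made only for illustration.

```python
def dimer_cross_section(absorbance, path_cm, n_dimer, n_cl2_assumed, sigma_cl2):
    """Beer-Lambert (base-e): A = (sigma_dimer * n_dimer + sigma_cl2 * n_cl2) * L.

    Solves for sigma_dimer after subtracting the Cl2 contribution computed
    from the *assumed* Cl2 concentration.
    """
    return (absorbance / path_cm - sigma_cl2 * n_cl2_assumed) / n_dimer

# Hypothetical values at one wavelength between 300 and 400 nm (NOT measured data)
path_cm = 100.0
n_dimer = 1e14            # molecules cm^-3
n_cl2 = 5e14              # molecules cm^-3, actual Cl2 byproduct
sigma_dimer_true = 1e-19  # cm^2 molecule^-1
sigma_cl2 = 2e-19         # cm^2 molecule^-1

# "Measured" absorbance from both absorbers
absorbance = (sigma_dimer_true * n_dimer + sigma_cl2 * n_cl2) * path_cm

exact = dimer_cross_section(absorbance, path_cm, n_dimer, n_cl2, sigma_cl2)
biased = dimer_cross_section(absorbance, path_cm, n_dimer, n_cl2 * 1.045, sigma_cl2)

print(f"recovered: {exact:.2e}; with Cl2 overestimated by 4.5%: {biased:.2e}")
print(f"relative error in dimer cross section: {biased / sigma_dimer_true - 1:.0%}")
```

With these made-up numbers, overestimating the Cl2 by 4.5 percent lowers the derived dimer cross section by about 45 percent, because the error in a large subtracted term lands entirely on the small remainder.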

September 1, 2014

Scripps Institution of Oceanography at UC San Diego today announced that Wendy and Eric Schmidt have provided a grant that will support continued operation of the renowned Keeling Curve measurement of atmospheric carbon dioxide levels. The grant provides $500,000 over five years to support the operations of the Scripps CO2 Group, which maintains the Keeling Curve.

CO2 Group Director Ralph Keeling said the grant will make it possible for his team to restore atmospheric measurements that had been discontinued because of a lack of funding, address a three-year backlog of samples that have been collected but not analyzed, and enhance outreach efforts that educate the public about the role carbon dioxide plays in climate.

"I'm very grateful to be able to return to doing science and being attentive to these records. When it comes to tracking the rise in carbon dioxide, every year is a new milestone. We are still learning what the rise really means for humanity and the rest of the planet,” said Keeling.

Wendy Schmidt, co-founder with her husband of The Schmidt Family Foundation and The Schmidt Ocean Institute, said “The Scripps CO2 Project is critical to documenting the atmospheric changes on our planet and the Keeling Curve is an essential part of that tracking process. As government funding for science in general is decreasing, Eric and I are delighted to work with Scripps to help it continue its benchmark CO2 Project.”

The Schmidt Family Foundation advances the development of renewable energy and the wiser use of natural resources and houses its grant-making operation in The 11th Hour Project, which supports more than 150 nonprofit organizations in program areas including climate and energy, ecological agriculture, human rights, and our maritime connection.

In 2009, the Schmidts created the Schmidt Ocean Institute (SOI), and in 2012 launched the research vessel Falkor as a mobile platform to advance ocean exploration, discovery, and knowledge, and catalyze sharing of information about the oceans.

In keeping with the couple’s commitment to ocean health issues, Wendy Schmidt has partnered with XPRIZE to sponsor the $1.4 million Wendy Schmidt Oil Cleanup XCHALLENGE, awarded in 2011, and the Wendy Schmidt Ocean Health XPRIZE, a prize that will respond to the global need for better information about the process of ocean acidification. It will be awarded in 2015.

The Keeling Curve has made measurements of carbon dioxide in the atmosphere at a flagship station on Hawaii’s Mauna Loa since 1958. In addition, the Scripps CO2 Group measures carbon dioxide levels at several other locations around the world from Antarctica to Alaska. The measurement series established that global levels of CO2, a heat-trapping gas that raises atmospheric and ocean temperatures as it accumulates, have risen substantially in the past century. From a concentration that had never risen above 280 parts per million (ppm) before the Industrial Revolution, CO2 concentrations had risen to 315 ppm when the first Keeling Curve measurements were made. In 2013, concentrations at Mauna Loa rose above 400 ppm for the first time in human history and likely for the first time in 3-5 million years. Multiple lines of scientific research have attributed the rise to the use of fossil fuels in everyday activities.

The measurement series has become an icon of science with its steadily rising seasonal sawtooth representation of CO2 levels now a familiar image alongside Watson and Crick’s double helix representation of DNA and Charles Darwin’s finch sketches. Keeling Curve creator Charles David Keeling, Ralph Keeling’s father, received several honors for his work before his death in 2005, including the National Medal of Science from then-President George W. Bush.

The value of the Keeling Curve has increased over time, making possible discoveries about Earth processes that would have been extremely difficult to observe over short time periods or with only sporadic measurements. For instance, in 2013, researchers discovered that the annual range of CO2 levels is increasing. This finding may point to an increase in photosynthetic activity in response to a greater availability of a key nutrient for plant life.

Nuances in Keeling Curve measurements have similarly identified the global effects of events like volcanic eruptions, influences that would have been difficult to discern if measurements were made infrequently or periodically suspended. In addition, the Keeling Curve helps researchers understand the proportion of carbon dioxide being absorbed by the oceans, which in turn helps them estimate the pace of phenomena such as ocean acidification. In the past decade, scientists have come to widely study the ecological effects of acidification, which happens as carbon dioxide reacts chemically with seawater.

The Keeling Curve could eventually serve as a bellwether revealing the progress of efforts to diminish fossil fuel use. Save for seasonal variations, the measurement has not trended downward at any point in its history.

This is indeed good news and praise is due to the Schmidts, but $500K for 5 years is about one NSF grant, and Scripps and Ralph Keeling still deserve the bunnies' support.

- Robert Monroe

However, the new research shows worldwide emissions of CCl4 average 39 kilotons per year, approximately 30 percent of peak emissions prior to the international treaty going into effect.

In addition to unexplained sources of CCl4, the model results showed the chemical stays in the atmosphere 40 percent longer than previously thought. The research was published online in the Aug. 18 issue of Geophysical Research Letters.

Geez, even tho it is a press release they could have provided a link. To Eli this is the most important of the results in the paper. If one simplistically looks at the problem as a one-box model, there are two ways to explain the slower-than-expected fall in CCl4: either the destruction rate is slower than expected, or the emission rate is higher, or some combination of the two. The problem with deciding which is which is that the two interact. If your lifetime is too short, the inferred emissions will look too high, and if the lifetime is too long, the inferred emissions will look too low. Liang et al. have a nice way of showing this:
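Eli's one-box argument can be written as a budget, dB/dt = E - B/τ, where B is the atmospheric burden, E the emission rate and τ the lifetime; solving for E with the observed trend gives the emissions needed to match the observations. The sketch below is that simplistic one-box version, not the two-box model Liang et al. used, and the burden (85 ppt), trend (-1.2 ppt/yr) and 26-year baseline lifetime are illustrative assumptions; the longer lifetime just applies the press release's 40 percent.

```python
def inferred_emissions(burden, trend, lifetime_yr):
    """One-box budget dB/dt = E - B/tau, solved for the emission rate E.

    burden in ppt and trend in ppt/yr, so E comes out in ppt/yr
    (emissions expressed as mixing-ratio equivalents).
    """
    return trend + burden / lifetime_yr

# Illustrative assumptions, not values taken from Liang et al.
burden_ppt = 85.0
trend_ppt_per_yr = -1.2
tau_old = 26.0
tau_new = tau_old * 1.4      # "40 percent longer than previously thought"

for tau in (tau_old, tau_new):
    e = inferred_emissions(burden_ppt, trend_ppt_per_yr, tau)
    print(f"lifetime {tau:.1f} yr -> inferred emissions {e:.2f} ppt/yr")
```

Holding the burden and trend fixed, the 40 percent longer lifetime lowers the inferred emissions from about 2.07 to about 1.14 ppt/yr, which is the trade-off between lifetime and emissions that the figure maps out.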

Figure 2. CCl4 global mean trend (ppt/yr) as a function of total lifetime and emissions from the two-box model (gray contours). Purple contours indicate the emissions and τCCl4 ranges that yield IHGs within the observed 1.1–2.0 ppt range (2-σ) between 2000 and 2012, using the current best estimate EFn of 0.94. Red (Advanced Global Atmospheric Gases Experiment (AGAGE)-based) and blue (GMD-based) numbers show emissions and lifetimes derived using the observed IHG and trend for individual years (2000–2012). The dark (light) gray shading outlines the range of emissions and τCCl4 that can be reconciled with the observations for EFn of 0.94 (0.88–1.00). The black diamond symbol shows our current best estimate for τ (thick and thin red bars indicate 1-σ and 2-σ uncertainties, respectively) and the upper limit bottom-up potential emissions for 2007–2012 (thick blue bar shows 1-σ variance) with 1-σ uncertainty shown in black-hatched shading.

IHG: interhemispheric gradient. EFn: fraction of emissions in the Northern Hemisphere.